When will GPT-5 be released?

Predictions suggest GPT-5 will be released in November, possibly coinciding with ChatGPT's second anniversary. Around the same time, we can also expect other breakthrough models such as Gemini 2 Ultra, LLaMA-3, Claude-3, and Mistral-2.

Competition in this field is growing fiercer, as the race between Google's Gemini and GPT-4 Turbo shows.

GPT-5 will most likely be released in stages, as intermediate checkpoints from the model's training run. Training could take about 3 months in total, followed by another 6 months of safety testing.

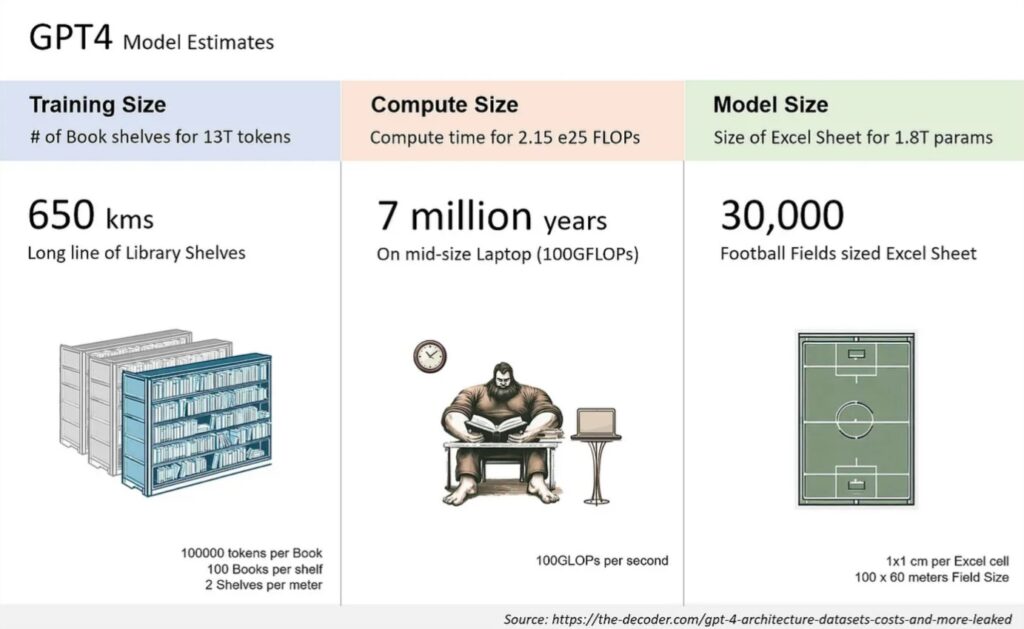

To better understand GPT-5, let's review GPT-4's reported technical specifications:

The image shows the following:

- Training data size: 13 trillion tokens, likened to a 650 km row of library shelves in which each shelf represents 100,000 tokens.

- Compute requirements: the compute needed for training is estimated at about 2.15 × 10^25 FLOP (floating-point operations). The image illustrates this as 7 million years on a mid-range laptop running at 100 GFLOP/s.

- Model size: 1.8 trillion parameters, likened to 30,000 football-field-sized Excel spreadsheets put together.
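As a quick sanity check on these figures, the short Python sketch below redoes the arithmetic. It takes the reported training compute of 2.15 × 10^25 FLOP, the 100 GFLOP/s laptop and the 100,000-tokens-per-shelf analogy directly from the infographic. It also assumes a 365.25-day year. The function names are illustrative only.

```python
# Back-of-the-envelope check of the GPT-4 infographic numbers.
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumption: 365.25-day year


def laptop_years(total_flop: float, flop_per_second: float) -> float:
    """Years a device sustaining `flop_per_second` needs to perform `total_flop`."""
    return total_flop / flop_per_second / SECONDS_PER_YEAR


def shelf_count(total_tokens: float, tokens_per_shelf: float) -> float:
    """Number of shelves needed if each shelf stands for `tokens_per_shelf` tokens."""
    return total_tokens / tokens_per_shelf


TRAINING_FLOP = 2.15e25  # reported GPT-4 training compute
LAPTOP_FLOPS = 100e9     # 100 GFLOP/s mid-range laptop
TOKENS = 13e12           # 13 trillion training tokens
TOKENS_PER_SHELF = 1e5   # 100,000 tokens per shelf

if __name__ == "__main__":
    print(f"{laptop_years(TRAINING_FLOP, LAPTOP_FLOPS) / 1e6:.1f} million years")  # 6.8 million years
    print(f"{shelf_count(TOKENS, TOKENS_PER_SHELF) / 1e6:.0f} million shelves")    # 130 million shelves
```

The result, about 6.8 million years, matches the "7 million years" quoted in the image. This supports reading the training compute as roughly 2.15 × 10^25 FLOP.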

Dataset: GPT-4 was trained on about 13 trillion tokens of both text and code. Some fine-tuning data came from ScaleAI and from internal sources.

Dataset mixture: the training data includes CommonCrawl & RefinedWeb, for a total of 13 trillion tokens. Rumors suggest additional sources such as Twitter, Reddit, YouTube, and a large collection of textbooks.

Training cost: training GPT-4 cost about $63 million, based on the compute and training time required.

Inference cost: GPT-4 costs 3 times as much as the 175B-parameter Davinci model to run. This is because it needs larger clusters and achieves lower utilization.

Inference architecture: inference runs on clusters of 128 GPUs, using 8-way tensor parallelism and 16-way pipeline parallelism.
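The two parallelism degrees multiply to give the cluster size: 8-way tensor parallelism times 16-way pipeline parallelism is 8 × 16 = 128 GPUs. The sketch below maps each GPU index to a pipeline stage and a tensor-parallel rank. The 8 and 16 come from the text. The rank ordering is an assumption that the source does not specify. This sketch keeps each stage's tensor-parallel ranks on consecutive indices, a common convention that lets them share a node.

```python
from dataclasses import dataclass

TENSOR_PARALLEL = 8     # 8-way tensor parallelism (from the text)
PIPELINE_PARALLEL = 16  # 16-way pipeline parallelism (from the text)


@dataclass(frozen=True)
class Placement:
    pipeline_stage: int  # which consecutive slice of layers this GPU holds
    tensor_rank: int     # which shard of each layer's weight matrices it holds


def world_size(tp: int = TENSOR_PARALLEL, pp: int = PIPELINE_PARALLEL) -> int:
    """Total GPUs needed: one per (pipeline stage, tensor shard) combination."""
    return tp * pp


def placement(gpu: int, tp: int = TENSOR_PARALLEL, pp: int = PIPELINE_PARALLEL) -> Placement:
    """Map a global GPU index to its pipeline stage and tensor-parallel rank.

    Assumes tensor-parallel ranks are contiguous: GPUs 0-7 form stage 0,
    GPUs 8-15 form stage 1, and so on.
    """
    if not 0 <= gpu < world_size(tp, pp):
        raise ValueError(f"gpu index {gpu} out of range")
    return Placement(pipeline_stage=gpu // tp, tensor_rank=gpu % tp)


if __name__ == "__main__":
    print(world_size())    # 128
    print(placement(0))    # Placement(pipeline_stage=0, tensor_rank=0)
    print(placement(127))  # Placement(pipeline_stage=15, tensor_rank=7)
```

Under this layout, a request flows through the 16 stages in order. Within each stage, the 8 GPUs split every matrix multiplication among themselves.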

Visual multimodality: GPT-4 includes a vision encoder that lets autonomous agents read web pages and transcribe images and videos. The encoder adds to the parameter count above and was fine-tuned on roughly 2 trillion additional tokens.

GPT-5: GPT-5 could have as many as 10 times the parameters of GPT-4, and that is HUGE! That would mean a larger embedding dimension, more layers, and twice as many experts.

Understanding GPT-5

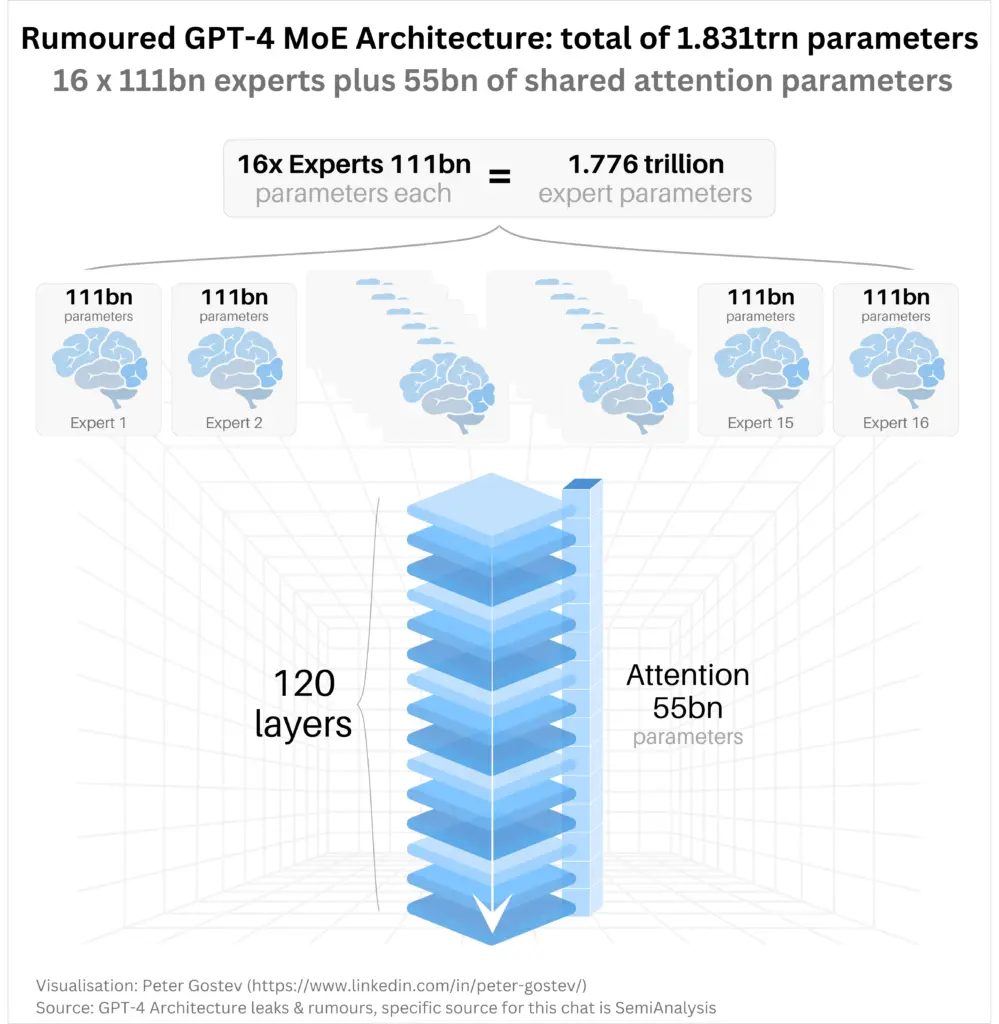

The main point of the image is that GPT-4 reportedly has about 1.831 trillion parameters in total.

The architecture is described as a Mixture of Experts (MoE) model with 16 expert components of 111 billion parameters each. Together these 16 experts account for 1.776 trillion parameters, referred to as “expert parameters”.

In addition, there is a shared attention component with 55 billion parameters.
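The total follows from simple addition: 16 × 111 billion = 1,776 billion expert parameters, plus 55 billion shared attention parameters, gives 1,831 billion (1.831 trillion). The helper name in this sketch is illustrative. All the counts come from the description above.

```python
BILLION = 10**9


def moe_total_params(num_experts: int, params_per_expert: int, shared_params: int) -> int:
    """Total parameters of an MoE model: every expert plus the shared component."""
    return num_experts * params_per_expert + shared_params


expert_params = 16 * 111 * BILLION                          # 16 experts x 111B each
total = moe_total_params(16, 111 * BILLION, 55 * BILLION)  # plus 55B shared attention

if __name__ == "__main__":
    print(expert_params / 10**12)  # 1.776 (trillion)
    print(total / 10**12)          # 1.831 (trillion)
```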

The image depicts the 16 experts as brain-like icons of 111 billion parameters each. These experts connect to a central stack of 120 layers, which likely represents the model's depth (its number of layers).

The 55-billion-parameter shared attention component is drawn as a large blue stack at the bottom, interacting with the expert components.
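To make the idea of 16 experts concrete, here is a toy, pure-Python sketch of one MoE layer. A gate scores all 16 experts for an input. Only the top-k experts run, and their outputs are blended using the gate probabilities, renormalized over the selected experts. The sketch is illustrative only, and its scalar inputs, scaling experts, random gate weights and k = 2 are all assumptions. The source does not say how GPT-4 routes tokens, and the shared attention component is not modeled here.

```python
import math
import random
from typing import Callable, List, Sequence

Expert = Callable[[float], float]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, experts: Sequence[Expert], gate_weights: Sequence[float], k: int = 2) -> float:
    """Route a scalar input to the top-k experts and blend their outputs."""
    # Gate logits: one score per expert (here, a simple per-expert scale of x).
    logits = [w * x for w in gate_weights]
    probs = softmax(logits)
    # Keep only the k highest-probability experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return sum(probs[i] / norm * experts[i](x) for i in top)


NUM_EXPERTS = 16  # matches the 16 experts described above

# Hypothetical experts: expert i simply multiplies its input by i + 1.
experts = [lambda x, s=s: s * x for s in range(1, NUM_EXPERTS + 1)]
rng = random.Random(0)
gate_weights = [rng.uniform(-1.0, 1.0) for _ in range(NUM_EXPERTS)]

if __name__ == "__main__":
    print(moe_forward(0.5, experts, gate_weights, k=2))
```

This selective routing is why an MoE model can hold far more parameters than it uses for any single input: only k of the 16 experts do work on each forward pass.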

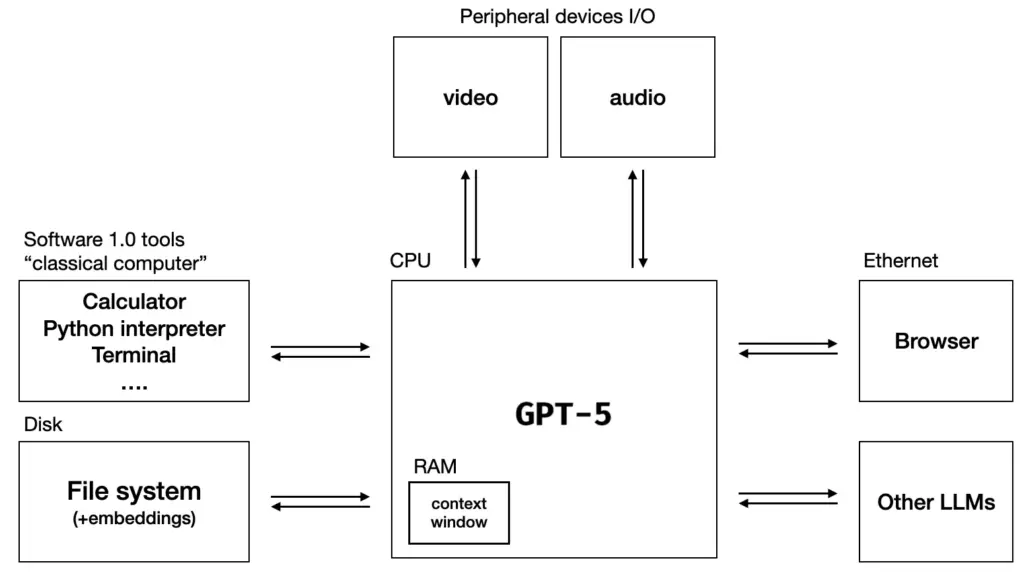

GPT-5 “compared” to an operating system

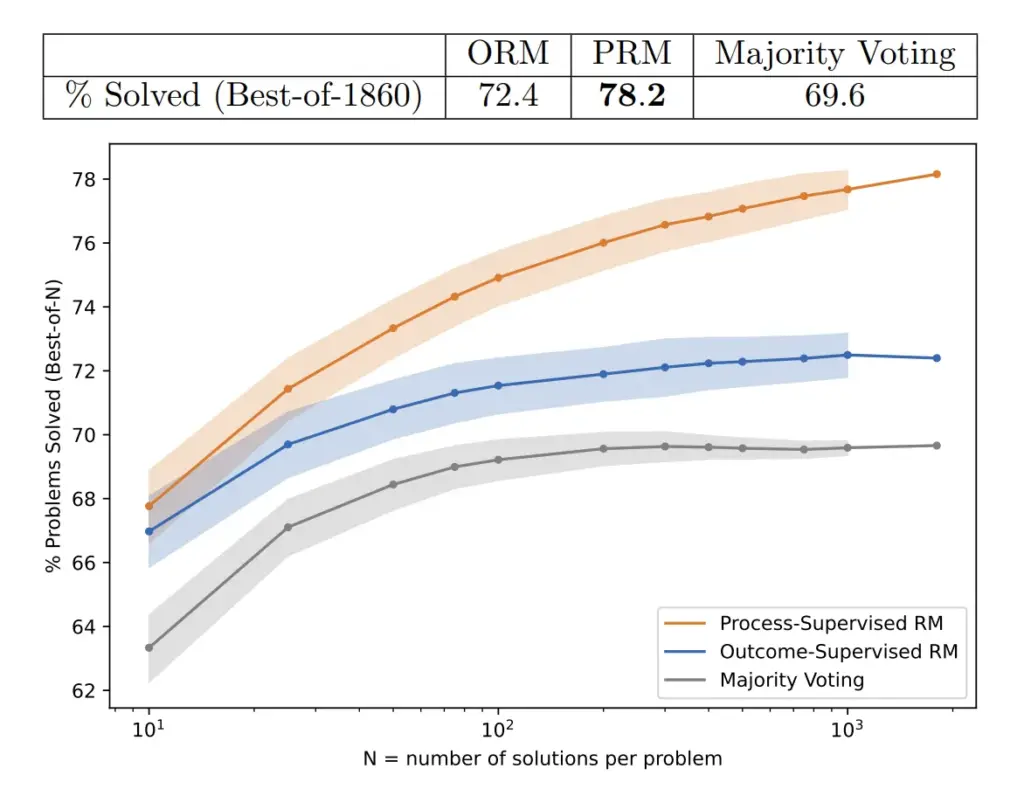

A comparison of outcome-supervised and process-supervised reward models, evaluated by their ability to search over many test solutions.

Sampling the model thousands of times and choosing the answer whose reasoning steps are rated highest doubled performance on math. And no, this does not apply only to math: it also produced impressive results in other STEM fields.
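The search described above is essentially best-of-N reranking: sample many candidate solutions, score each one with a reward model, and keep the top-scoring answer. The sketch below contrasts the two scoring styles:

- An outcome reward model gives one score to the whole solution.
- A process reward model scores every reasoning step.

Here a solution's process score is the product of its per-step probabilities of being correct. This is one common way to combine step scores and is an assumption on our part. The candidates and scores are made-up placeholders, not real model outputs.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Candidate:
    answer: str
    outcome_score: float      # outcome RM: one score for the whole solution
    step_scores: List[float]  # process RM: per-step probability the step is correct


def process_score(step_scores: Sequence[float]) -> float:
    """Probability that every step is correct, treating step scores as independent."""
    return math.prod(step_scores)


def best_of_n_outcome(cands: Sequence[Candidate]) -> Candidate:
    """Pick the candidate with the highest whole-solution score."""
    return max(cands, key=lambda c: c.outcome_score)


def best_of_n_process(cands: Sequence[Candidate]) -> Candidate:
    """Pick the candidate whose reasoning steps are rated highest overall."""
    return max(cands, key=lambda c: process_score(c.step_scores))


if __name__ == "__main__":
    # Hypothetical samples for one problem; in practice N could be in the thousands.
    samples = [
        Candidate("42", outcome_score=0.90, step_scores=[0.95, 0.40, 0.90]),  # shaky middle step
        Candidate("41", outcome_score=0.70, step_scores=[0.95, 0.90, 0.92]),
    ]
    print(best_of_n_outcome(samples).answer)  # 42
    print(best_of_n_process(samples).answer)  # 41
```

The two rankings disagree because the process score penalizes the weak middle step (0.95 × 0.40 × 0.90 ≈ 0.34), even though the final answer looked convincing as a whole.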

GPT-5 will also be trained on far more data, improving on volume, quality, and diversity.

This will include large amounts of text, image, audio, and video data, as well as multilingual and reasoning data.

As a result, visual multimodality should improve even further this year, while LLM reasoning starts to take off.

This will make GPT-5 more versatile, bringing it closer to using an LLM as an operating system.

2024 will bring more refined, more commercially viable versions of existing models, and people will be surprised by how good these models are.

No one really knows what the new models will be like. The biggest theme in the history of artificial intelligence is that it is always full of surprises.

Every time you think you know something, you scale it up 10x and realize you know nothing at all. As a species, we are genuinely figuring this out together.

Still, the overall progress in LLMs and artificial intelligence is a step toward AGI.

That's the full article on GPT-5 from TentenAI. Follow us for more AI news and updates, and check out the AI tools we have developed.