What is GPT-3.5-turbo-1106?

GPT-3.5-turbo-1106 is a large language model (LLM) developed by OpenAI. It is an updated version of GPT-3.5-turbo, released in November 2023 (the "1106" suffix refers to the November 6 snapshot). GPT-3.5-turbo-1106 has several improvements over the previous version, including:

- Improved performance: GPT-3.5-turbo-1106 is faster and more accurate than GPT-3.5-turbo.

- Improved text generation capabilities: GPT-3.5-turbo-1106 can create more creative and engaging text than GPT-3.5-turbo.

- Improved instruction following: GPT-3.5-turbo-1106 can understand and execute more complex instructions than GPT-3.5-turbo.

Compare GPT-3.5-turbo-1106 and GPT-3.5-turbo

| Feature | GPT-3.5-turbo-1106 | GPT-3.5-turbo |
| --- | --- | --- |
| Performance | Faster and more accurate | Slower and less accurate |
| Text generation capabilities | More creative and engaging | Less creative and engaging |
| Ability to follow instructions | Can understand and carry out more complex instructions | Can understand and carry out simple instructions |
| Supports JSON mode | Yes | No |
| Supports parallel function calling | Yes | No |
| Improved information retrieval | Yes | No |

One of the most notable improvements of GPT-3.5-turbo-1106 is JSON mode support. JSON mode constrains the model so that the message it generates is a syntactically valid JSON object, which your code can then parse directly. This expands the model’s capabilities in applications such as data processing and automation.
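As a rough sketch of what turning on JSON mode looks like in a request, the helper below builds the keyword arguments for a Chat Completions call. The function name `json_mode_request` is hypothetical and only for illustration; `response_format={"type": "json_object"}` is the same parameter used in the API example later in this article. The local check that some message mentions "JSON" is an assumption about what the API expects in this mode, so treat it as a convenience rather than a rule.

```python
def json_mode_request(messages, model="gpt-3.5-turbo-1106"):
    """Build keyword arguments for a chat completion that uses JSON mode."""
    # Assumption: in JSON mode, at least one message should ask for JSON explicitly.
    if not any("json" in (m.get("content") or "").lower() for m in messages):
        raise ValueError('at least one message should mention "JSON"')
    return {
        "model": model,
        "response_format": {"type": "json_object"},  # switches JSON mode on
        "messages": messages,
    }


request = json_mode_request(
    [{"role": "user", "content": "Give me the JSON for an object that represents a cat."}]
)
# With the official client, this would be sent as:
#   client.chat.completions.create(**request)
```

Keeping the request construction separate from the network call makes it easy to inspect or log exactly what is being sent.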

Instructions to enable JSON mode in GPT-3.5-turbo-1106

JSON mode can be used in two ways:

1. Use it directly in the OpenAI Playground UI.

2. Call it through OpenAI’s API.

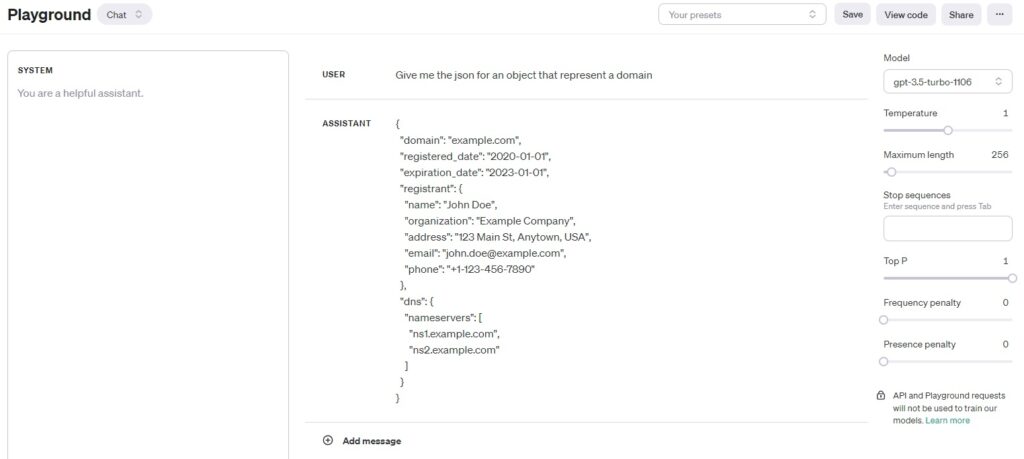

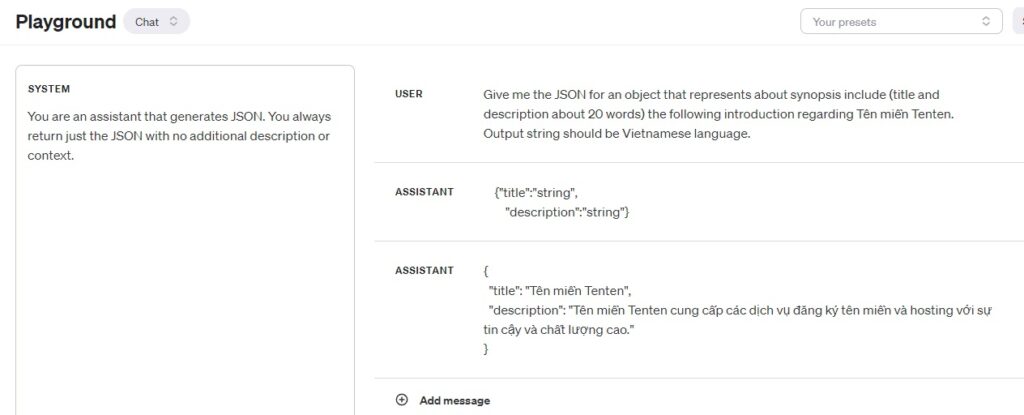

Using JSON mode in the Playground UI

You can access it directly at the following link: https://platform.openai.com/playground?mode=chat&model=gpt-3.5-turbo-1106

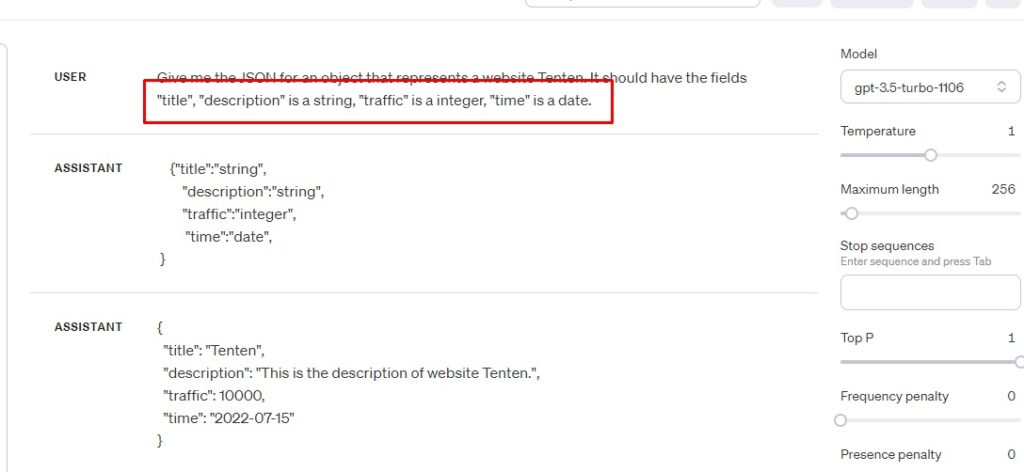

The User message would take the form: Give me the JSON for an object that represents (subject).

When the result comes back as correctly formatted JSON, JSON mode is enabled.

However, to keep the fixed JSON structure that you need, you will have to combine the Assistant and System messages.

The Assistant message shows what the JSON output should look like, while the System and User messages act as format prompts. In the example above, this gives a fixed JSON segment:

{ "title": "Tenten Domain", "description": "Tenten Domain provides domain registration and hosting services with reliability and high quality." }

However, this result is not 100% guaranteed. The Assistant message essentially builds on the User’s content, so when you write a template in the Assistant message, make sure the same fields are declared in the User message.
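One way to keep the User and Assistant messages in agreement is to generate both from a single template. The sketch below is only an illustration: `build_messages` is a hypothetical helper, the "Use exactly these keys" wording is an assumption added for the example, and placing the System message first is simply the common convention rather than something this article prescribes.

```python
import json


def build_messages(subject, template):
    """Return System, User and Assistant messages that all describe the same JSON shape."""
    keys = ", ".join(template)  # joins the template's key names
    return [
        {
            "role": "system",
            "content": "You are an assistant that generates JSON. "
                       "You always return just the JSON with no additional description or context.",
        },
        {
            "role": "user",
            "content": f"Give me the JSON for an object that represents {subject}. "
                       f"Use exactly these keys: {keys}.",
        },
        # The Assistant message shows the shape; its keys match what the User message declares.
        {"role": "assistant", "content": json.dumps(template)},
    ]


messages = build_messages("a domain provider", {"title": "string", "description": "string"})
```

Because the key list and the Assistant template come from the same dictionary, they cannot drift apart when you change the schema.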

Use JSON mode via OpenAI’s API

This works much like the Playground UI. Your application needs ChatGPT’s data in a format that follows a reliable, consistent, and predictable schema.

Ideally, you want to parse the response from ChatGPT in code and do something useful with it.

    from openai import OpenAI

    # The client reads the API key from the OPENAI_API_KEY environment variable
    client = OpenAI()

    # Placeholder inputs (hypothetical values; replace them with your own)
    query = "Tenten Domain"
    language = "English"

    response = client.chat.completions.create(
        model="gpt-3.5-turbo-1106",
        timeout=10,
        # Turn on JSON mode
        response_format={"type": "json_object"},
        messages=[
            {
                "role": "user",
                "content": (
                    "Give me the JSON for an object that represents a synopsis "
                    "(a title and a description of about 20 words) of the "
                    f"following introduction regarding {query}. "
                    f"Output strings should be in the {language} language."
                ),
            },
            {
                # Template showing the expected JSON shape
                "role": "assistant",
                "content": '{"title": "string", "description": "string"}',
            },
            {
                "role": "system",
                "content": "You are an assistant that generates JSON. You always return just the JSON with no additional description or context.",
            },
        ],
        temperature=1,
        max_tokens=1000,
        top_p=1,
        frequency_penalty=0,
        presence_penalty=0,
    )

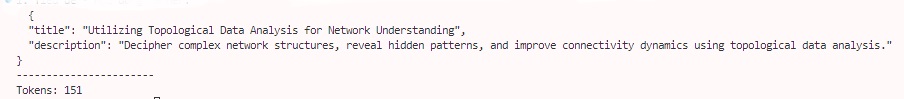

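    # The generated JSON is returned as a string in the first choice
    response1 = response.choices[0].message.content
    print(response1)

Printing the content shows the JSON string returned by the model.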
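Once the content is printed, the next step is usually to parse it. The sketch below shows one way to do that with the standard `json` module and a basic check for the `title` and `description` fields requested above. `parse_synopsis` is a hypothetical helper, and the sample string stands in for a real API reply so the example runs offline.

```python
import json


def parse_synopsis(content):
    """Parse the model's reply and check that it has the two expected string fields."""
    data = json.loads(content)  # raises json.JSONDecodeError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key in ("title", "description"):
        if not isinstance(data.get(key), str):
            raise ValueError(f"missing or non-string field: {key}")
    return data


# Sample reply, copied from the Playground example earlier in this article.
sample = (
    '{ "title": "Tenten Domain", "description": "Tenten Domain provides domain '
    'registration and hosting services with reliability and high quality." }'
)
synopsis = parse_synopsis(sample)
print(synopsis["title"])
```

In a real application you would pass `response.choices[0].message.content` to the helper instead of the sample string.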

Additionally, you can refer to the following example, which calls the API from Node.js without JSON mode.

    // Use the openai package from npm to call ChatGPT
    import OpenAI from "openai";

    // Create a new instance of the openai client with our API key
    const openai = new OpenAI({ apiKey: process.env.OPENAI_KEY });

    // Call ChatGPT's completions endpoint and ask for some JSON
    const gptResponse = await openai.chat.completions.create({
      model: "gpt-3.5-turbo",
      temperature: 1,
      messages: [
        {
          role: "user",
          content: "Give me the JSON for an object that represents a cat."
        }
      ],
    });

    // Attempt to read the response as JSON,
    // Will most likely fail with a SyntaxError...
    const json = JSON.parse(gptResponse.choices[0].message.content);

But this will only work if gptResponse.choices[0].message.content is valid JSON every time.

Therefore, the expected JSON shape can be described with a TypeScript type:

    type Cat = {
      name: string,
      colour: "brown" | "grey" | "black",
      age: number
    }

    // Read the response JSON and type it to our Cat object schema
    const json = <Cat>JSON.parse(gptResponse.choices[0].message.content);

However, without JSON mode ChatGPT cannot be relied upon to return valid JSON in a predictable format, and a type assertion does not check anything at runtime. Malformed or unexpected output can cause errors in the app that uses it, especially when consistency is key.

This becomes a real problem when you write code that relies on real-time feedback from ChatGPT to trigger specific actions or updates.
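A common way to soften this problem is to validate every reply and retry when it does not match. The sketch below is a minimal illustration under stated assumptions: `get_valid_json` and `validate_cat` are hypothetical helpers, the `fetch` argument stands in for whatever function calls the API, and the canned replies simulate a chatty answer followed by a clean one.

```python
import json


def get_valid_json(fetch, validate, attempts=3):
    """Call fetch() until it returns text that parses as JSON and passes validate()."""
    last_error = None
    for _ in range(attempts):
        text = fetch()
        try:
            data = json.loads(text)
            validate(data)
            return data
        except ValueError as err:  # json.JSONDecodeError is a subclass of ValueError
            last_error = err
    raise RuntimeError(f"no valid JSON after {attempts} attempts") from last_error


def validate_cat(data):
    """Raise ValueError unless data matches the Cat shape described above."""
    if not isinstance(data, dict):
        raise ValueError("expected an object")
    if not isinstance(data.get("name"), str):
        raise ValueError("name must be a string")
    if data.get("colour") not in ("brown", "grey", "black"):
        raise ValueError("colour must be brown, grey or black")
    age = data.get("age")
    if isinstance(age, bool) or not isinstance(age, (int, float)):
        raise ValueError("age must be a number")


# Stand-in for a real API call: the first reply is chatty, the second is clean JSON.
replies = iter([
    'Sure! Here is your cat: {"name": "Tom"}',
    '{"name": "Tom", "colour": "grey", "age": 3}',
])
cat = get_valid_json(lambda: next(replies), validate_cat)
```

Retrying costs extra requests, so a small `attempts` value combined with JSON mode is usually a reasonable starting point.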

Good luck!