What is GPT-4o?

OpenAI expands the capabilities of free ChatGPT and launches GPT-4o, a new step towards more natural human-computer interaction.

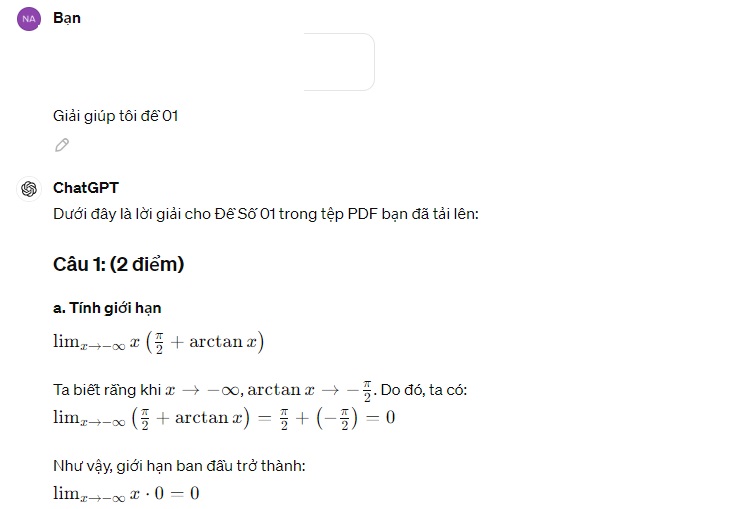

GPT-4o (“o” stands for “omni”) can accept any combination of text, audio, and images as input and generate any combination of text, audio, and images as output.

GPT-4o can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds. This is similar to human response time in a conversation.

GPT-4o matches GPT-4 Turbo on English text and code, and is significantly better at processing text in languages other than English.

GPT-4o is also faster and 50% cheaper in the API. In particular, it understands images and audio much better than existing models.


“Multipurpose” model

OpenAI has launched GPT-4o, its new flagship model, which can reason across audio, images, and text in real time. Before GPT-4o, users could talk to ChatGPT in Voice Mode with an average latency of 2.8 seconds (GPT-3.5) or 5.4 seconds (GPT-4).

To achieve this, Voice Mode was a pipeline of three separate models. A simple model transcribed audio to text, GPT-3.5 or GPT-4 took in that text and produced text, and a third simple model converted that text back into audio.

As a result, the main source of intelligence, GPT-4, lost a lot of information. It could not directly observe tone, multiple speakers, or background noise, and it could not produce laughter, sing, or express emotion.
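The following minimal Python sketch makes the three-stage pipeline concrete. Every component (`AudioClip`, `transcribe`, `text_model`, `synthesize`) is a hypothetical stand-in, not a real OpenAI API, and the stage latencies are made-up numbers. It shows two consequences of the cascade. Anything the transcript does not capture (tone, number of speakers, background noise) never reaches the language model. The end-to-end delay is the sum of all three stages.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Hypothetical stand-in for an audio signal: the spoken words plus
    the cues that a plain-text transcript cannot carry."""
    words: str
    tone: str
    speakers: int
    background_noise: str


def transcribe(clip: AudioClip) -> str:
    # Stage 1 (hypothetical speech-to-text): only the words survive.
    # Tone, speaker count, and background noise are discarded here.
    return clip.words


def text_model(prompt: str) -> str:
    # Stage 2 (stand-in for GPT-3.5 / GPT-4): text in, text out.
    return f"Reply to: {prompt}"


def synthesize(text: str) -> AudioClip:
    # Stage 3 (hypothetical text-to-speech): always a single neutral voice,
    # so laughter, singing, or emotion cannot be expressed.
    return AudioClip(words=text, tone="neutral", speakers=1, background_noise="none")


def cascaded_voice_mode(clip: AudioClip, stage_latencies_s: list[float]) -> tuple[AudioClip, float]:
    """Run the three stages in sequence. Total latency is the sum of the stages."""
    reply = synthesize(text_model(transcribe(clip)))
    return reply, sum(stage_latencies_s)


user = AudioClip(words="Can you help me?", tone="anxious", speakers=2, background_noise="traffic")
reply, latency = cascaded_voice_mode(user, [0.5, 1.5, 0.5])  # made-up stage latencies
print(reply.words, reply.tone, latency)
```

The user's anxious tone and the second speaker are gone by the time the text model runs. A single end-to-end model has no such intermediate text bottleneck.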

With GPT-4o, OpenAI trained a single new model end-to-end across text, images, and audio, so all inputs and outputs are processed by the same neural network. Because GPT-4o is OpenAI's first model to combine all of these modalities, OpenAI is still exploring what the model can do and where its limitations lie.

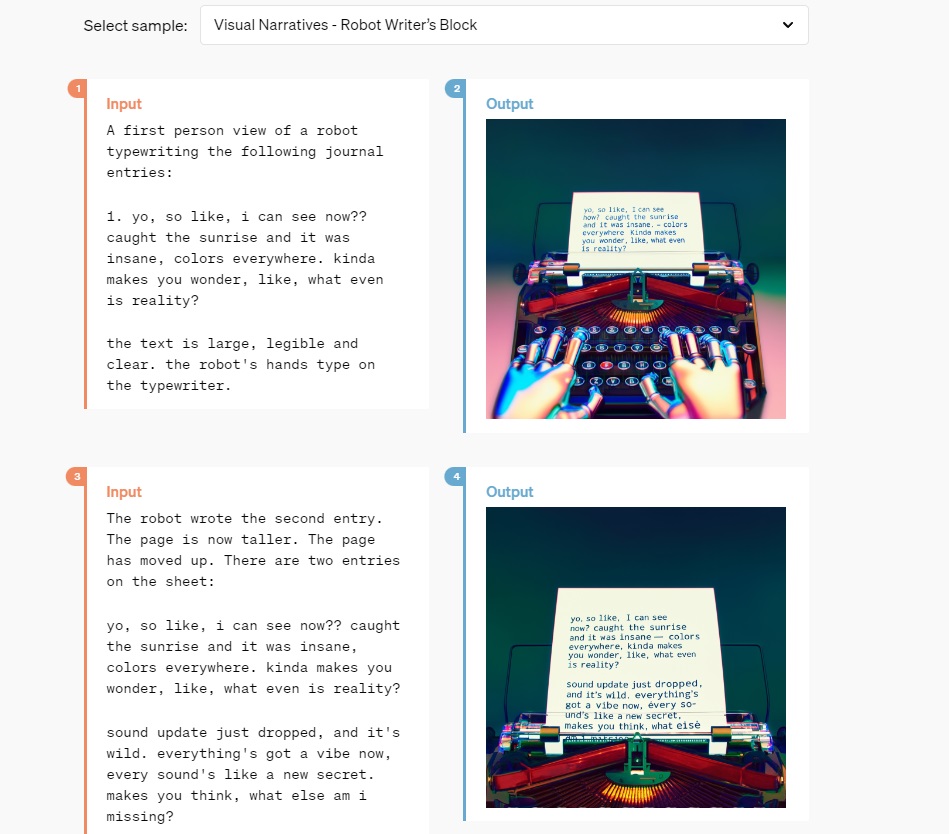

Some applications of GPT-4o:

- Sally the mailwoman

- Poster creation for the movie “Detective”

- Character design – Geary the robot

- Poetic typography with iterative editing 1

- Poetic typography with iterative editing 2

- Commemorative coin design for GPT-4o

- Photo to caricature

- Text to font

- 3D object synthesis

- Brand placement – logo on coaster

- Poetic typography

- Multiline rendering – robot texting

- Meeting notes with multiple speakers

- Lecture summarization

- Variable binding – cube stacking

- Concrete poetry

Model evaluation

On traditional benchmarks, GPT-4o achieves GPT-4 Turbo-level performance on text, reasoning, and coding intelligence.

At the same time, it sets new records for multilingual, audio, and vision capabilities.

Tokenization

These 20 languages were chosen to represent the compression achieved by the new tokenizer across different language families.

| Language | Tokens (new tokenizer) | Reduction vs. previous tokenizer |
|---|---|---|
| Gujarati | 33 | 4.4x |
| Telugu | 45 | 3.5x |
| Tamil | 35 | 3.3x |
| Marathi | 33 | 2.9x |
| Hindi | 31 | 2.9x |
| Urdu | 33 | 2.5x |
| Arabic | 26 | 2.0x |
| Persian | 32 | 1.9x |
| Russian | 23 | 1.7x |
| Korean | 27 | 1.7x |
| Vietnamese | 30 | 1.5x |
| Chinese | 24 | 1.4x |
| Japanese | 26 | 1.4x |
| Turkish | 30 | 1.3x |
| Italian | 28 | 1.2x |
| German | 29 | 1.2x |
| Spanish | 26 | 1.1x |
| Portuguese | 27 | 1.1x |
| French | 28 | 1.1x |
| English | 24 | – |
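In OpenAI's announcement, each ratio in this table compares the token count for the same sample text under GPT-4o's new tokenizer with the count under the previous tokenizer. A factor of 4.4x means roughly 4.4 times fewer tokens. The short Python sketch below uses a few rows copied from the table and recovers an approximate previous count as new tokens × factor. Because the published factors are rounded to one decimal place, the results are only estimates. The helper name `estimated_previous_tokens` is purely illustrative.

```python
# A few rows copied from the table: (tokens with the new tokenizer, reduction factor).
rows = {
    "Gujarati": (33, 4.4),
    "Hindi": (31, 2.9),
    "Russian": (23, 1.7),
    "French": (28, 1.1),
}


def estimated_previous_tokens(new_tokens: int, reduction: float) -> int:
    """Hypothetical helper: a factor r means previous / new ≈ r,
    so the previous count is about new_tokens * r (rounded)."""
    return round(new_tokens * reduction)


for language, (new, factor) in rows.items():
    print(f"{language}: ~{estimated_previous_tokens(new, factor)} -> {new} tokens")
```

Fewer tokens for the same text means lower API cost and more room in the context window, which is why the largest gains matter most for the languages at the top of the table.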

How to use GPT-4o for free

First, go to https://chat.openai.com/ and log in.

After you enter a prompt and the response finishes, the model selection menu appears. Select GPT-4o.

Note: GPT-4o is free to use, but usage is limited once you exceed the allowed number of messages.

Then start trying it out.

Price

GPT-4o is OpenAI's most advanced multimodal model. It is faster and cheaper than GPT-4 Turbo and has stronger vision capabilities.

The model has a 128K context window and a knowledge cutoff of October 2023.

| Model | Input | Output |
|---|---|---|
| gpt-4o | $0.005/1K tokens | $0.015/1K tokens |
| gpt-4o-2024-05-13 | $0.005/1K tokens | $0.015/1K tokens |
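The small Python sketch below shows how the per-1K-token prices translate into the cost of a single request. It makes two assumptions: "128K context" means 128,000 tokens shared between the prompt and the response, and input and output tokens are billed separately at the listed rates. `estimate_cost` is a hypothetical helper, not part of any OpenAI SDK. Real charges depend on the exact token counts the API reports.

```python
# Prices from the table, in US dollars per 1,000 tokens.
INPUT_PRICE_PER_1K = 0.005
OUTPUT_PRICE_PER_1K = 0.015
# Assumption: "128K context" is read as 128,000 tokens covering input plus output.
CONTEXT_WINDOW = 128_000


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated USD cost of one request at the listed rates."""
    if input_tokens + output_tokens > CONTEXT_WINDOW:
        raise ValueError("request does not fit in the context window")
    return (input_tokens / 1000) * INPUT_PRICE_PER_1K + (output_tokens / 1000) * OUTPUT_PRICE_PER_1K


print(f"${estimate_cost(2_000, 500):.4f}")
```

For example, a request with 2,000 input tokens and 500 output tokens costs 2 × $0.005 + 0.5 × $0.015 = $0.0175. Output tokens cost three times as much as input tokens, so long responses dominate the bill.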

Summary

GPT-4o is a significant improvement over GPT-3.5. Key points to note:

- Reads and understands images directly.

- Costs less than half as much as GPT-4.

- Roughly twice as fast as GPT-4.

- Free to use, but limited if you use it too heavily in a short period.

- Proficient at listening, speaking, reading, and writing, with strong reasoning ability.

In short, GPT-4o is a major improvement over its predecessor. Understanding and using ChatGPT is now essential for keeping up at work. That is why tentenai.vn keeps bringing you useful information and the latest news about AI. We have also launched our own AI tools, out of our passion for AI technology and to support our readers.